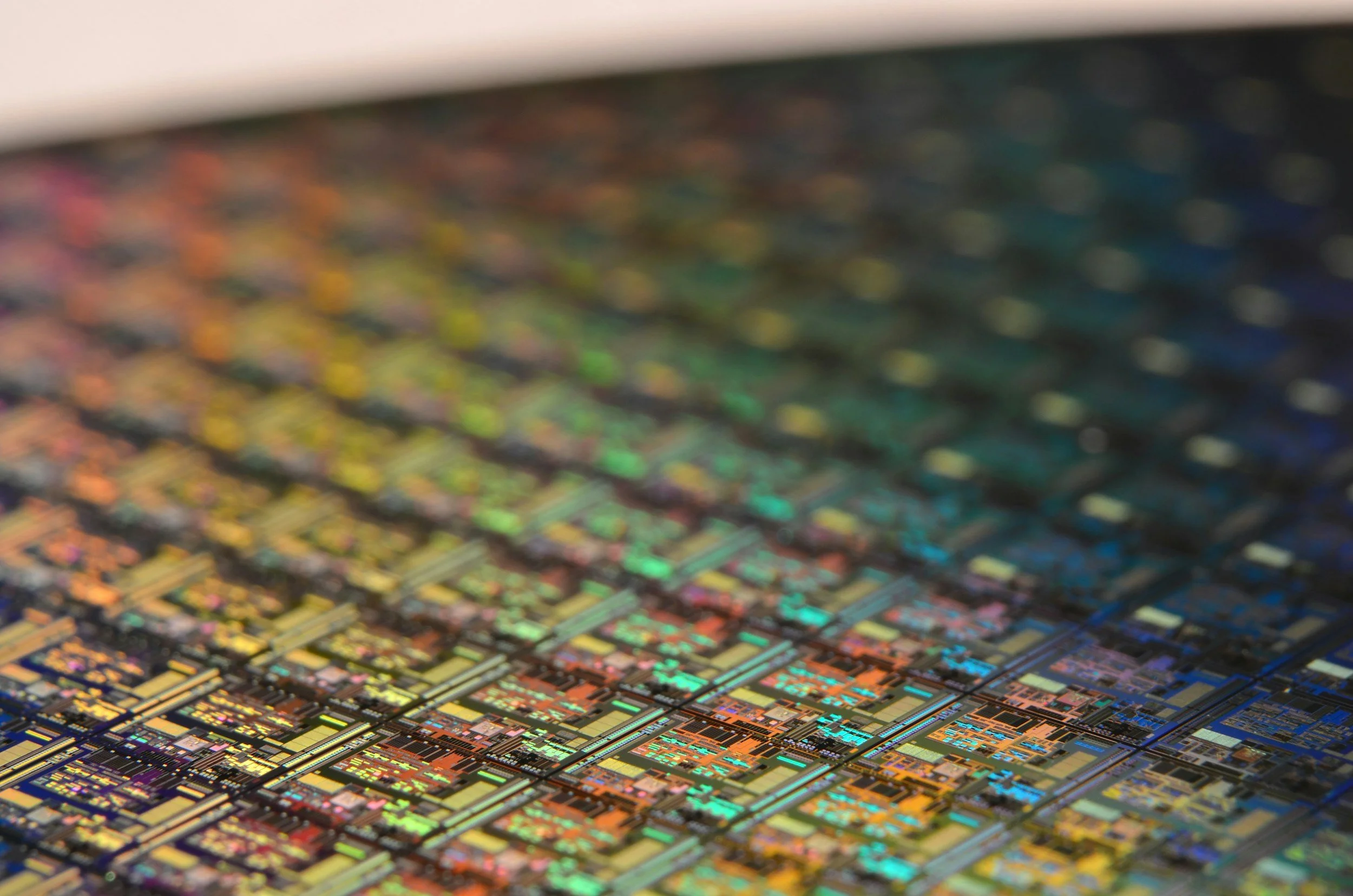

In 1999, Nvidia revolutionized the gaming industry with the GeForce 256, which it marketed as the world's first Graphics Processing Unit (GPU). By elevating computer graphics to new heights, GPUs became essential to richer interactions between humans and machines. It wasn't until 2009 that a Stanford paper showed GPUs could dramatically speed up the training of large-scale deep learning models, opening the floodgates of innovation in the emerging field of artificial intelligence.

Since then, demand for GPUs in artificial intelligence applications has helped grow Nvidia's annual revenue from roughly $3 billion to $61 billion, with forecasts projecting the overall market to reach $110.6 billion by 2030. However, GPUs are only the first step in AI-centric hardware design.

Alongside them, an entirely new market has blossomed for specialized hardware that improves the cost efficiency and capabilities of AI applications, in environments ranging from colossal data centers to humanoid robots to embedded microchips. Recently, OpenAI CEO Sam Altman made headlines with reports that he was seeking as much as $7 trillion to expand the production of AI chips.

In the rapidly evolving landscape of artificial intelligence (AI), hardware specialization has become a cornerstone for pushing the boundaries of what's possible. Here we explore the advancing field of AI-specialized hardware, including Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and specialized chiplets, focusing on how to navigate this swiftly changing domain to optimize costs and unlock new use cases.

GPUs

Overview

For savvy business leaders looking to refine operational efficiency and foster innovation, GPUs can be a game-changer in optimizing costs and enhancing decision-making. A CPU has a relatively small number of powerful cores optimized for executing instructions in sequence, while a GPU has thousands of simpler cores built for parallel processing, letting it perform thousands of computations simultaneously. This capability is instrumental when dealing with the vast amounts of data typical in AI and machine learning workflows, significantly cutting down the time needed for data processing and analysis.
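To make the idea of parallel processing concrete, here is a minimal Python sketch of data parallelism, the pattern GPUs exploit: each output element depends only on its own input, so the work can be split into independent chunks and handed to separate workers. The per-element function and the four-worker default are hypothetical choices for illustration. Python threads do not run CPU-bound code truly in parallel, so this only demonstrates how the work decomposes; in CUDA, a common pattern is to assign each element to its own GPU thread.

```python
from concurrent.futures import ThreadPoolExecutor


def transform(x: float) -> float:
    """Hypothetical per-element work: depends only on its own input."""
    return 2.0 * x + 1.0


def split(data: list[float], n_chunks: int) -> list[list[float]]:
    """Split data into n_chunks contiguous slices of nearly equal size."""
    size, remainder = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks take one extra element each.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def process_chunk(chunk: list[float]) -> list[float]:
    return [transform(x) for x in chunk]


def parallel_map(data: list[float], n_workers: int = 4) -> list[float]:
    """Apply transform to every element, one chunk per worker."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map yields results in the same order as the input chunks.
        results = pool.map(process_chunk, split(data, n_workers))
        return [y for chunk in results for y in chunk]


if __name__ == "__main__":
    data = [float(i) for i in range(10)]
    print(parallel_map(data))
```

Because no chunk needs another chunk's results, adding workers (or, on a GPU, thousands of threads) shortens the job without changing the answer.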

Advantages

Accelerated Computing

The investment in GPU technology may seem significant at first, but the return on investment materializes through faster computation and a reduced need for extensive physical computing infrastructure: for highly parallel workloads, the same computation can often be completed with fewer GPUs than CPUs. This lowers operational costs and increases the business's agility to adapt to market changes or explore new AI-driven opportunities. In essence, for decision-makers aiming to place their enterprises at the forefront of innovation and efficiency, the strategic deployment of GPUs is increasingly a necessity rather than an option in today's digitally driven marketplace.
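How much acceleration a GPU actually delivers depends on how much of a workload can run in parallel. Amdahl's law, a standard model from parallel computing, captures this: if a fraction p of the runtime parallelizes and that part speeds up by a factor s, the overall speedup is 1 / ((1 - p) + p / s). The sketch below applies it; the 90% parallel fraction, 100x speedup, and 100-hour baseline are hypothetical inputs, not benchmarks.

```python
def amdahl_speedup(parallel_fraction: float, parallel_speedup: float) -> float:
    """Overall speedup when only `parallel_fraction` of the runtime is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_speedup <= 0.0:
        raise ValueError("parallel_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / parallel_speedup)


def accelerated_hours(baseline_hours: float, parallel_fraction: float,
                      parallel_speedup: float) -> float:
    """Estimated job duration after acceleration."""
    return baseline_hours / amdahl_speedup(parallel_fraction, parallel_speedup)


if __name__ == "__main__":
    # Hypothetical job: 100 hours on CPUs, 90% parallelizable, 100x faster on GPUs.
    print(f"overall speedup: {amdahl_speedup(0.9, 100.0):.2f}x")
    print(f"new duration: {accelerated_hours(100.0, 0.9, 100.0):.1f} hours")
```

With these inputs the overall speedup is about 9.2x, so the 100-hour job drops to roughly 10.9 hours. That is far below the 100x of the parallel portion because the remaining serial 10% dominates, which is why profiling a workload belongs in any GPU ROI estimate.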

Mature Development Environment

The ecosystem surrounding GPU technology has matured significantly, offering a robust development environment that enhances productivity and innovation. With comprehensive support from libraries, frameworks, and toolkits such as CUDA for NVIDIA GPUs, developers have access to a wealth of resources that streamline coding, testing, and deploying GPU-accelerated applications. This maturity reduces the learning curve for new developers and facilitates rapid prototyping and iteration of AI models and data-intensive applications. It is also much easier to staff a team with CUDA or GPU programming experience than with expertise in any other specialized AI hardware.

Cloud Integration

Because GPUs are the most widely used specialized AI hardware, all major cloud providers offer managed GPU instances:

Amazon Web Services (AWS) - AWS offers a range of GPU instances through its EC2 (Elastic Compute Cloud) service, such as the P5, P4, P3, G5, and G4 families, designed for machine learning, high-performance computing, and graphics-intensive applications.

Google Cloud Platform (GCP) - GCP provides GPU-equipped virtual machines (VMs) through its Compute Engine and Kubernetes Engine services, with NVIDIA GPUs such as the H100, A100, L4, T4, V100, and P100 for various computing needs.

Microsoft Azure - Azure's NC, ND, and NV series virtual machines offer GPUs, mostly from NVIDIA, for high-performance computing, AI development, and graphics rendering, supporting a wide range of applications from deep learning to real-time rendering.

Disadvantages

Per-Unit Costs

The per-unit cost of high-performance GPUs can be prohibitive for some organizations, so the investment requires careful cost analysis in the early stages of planning. Organizations should be able to show that the gains from adopting the technology will outweigh the upfront cost over the long run, which means evaluating whether an application truly requires accelerated computing and which features specifically depend on GPUs.
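One common way to frame this analysis is a buy-versus-rent break-even: owning a GPU costs a purchase price plus an hourly operating cost, while renting costs a cloud hourly rate, so ownership pays off after purchase_cost / (cloud_rate - operating_cost) hours of use. The sketch below assumes a single flat hourly rate and ignores financing, resale value, and staffing; all figures are hypothetical placeholders, not real price quotes.

```python
import math

HOURS_PER_YEAR = 24 * 365


def break_even_hours(purchase_cost: float, onprem_hourly_cost: float,
                     cloud_hourly_rate: float) -> float:
    """Usage hours after which buying becomes cheaper than renting."""
    hourly_savings = cloud_hourly_rate - onprem_hourly_cost
    if hourly_savings <= 0:
        return math.inf  # Owning never catches up with renting.
    return purchase_cost / hourly_savings


def break_even_years(purchase_cost: float, onprem_hourly_cost: float,
                     cloud_hourly_rate: float, utilization: float) -> float:
    """Calendar years to break even when the GPU is busy `utilization` of the time."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    hours = break_even_hours(purchase_cost, onprem_hourly_cost, cloud_hourly_rate)
    return hours / (HOURS_PER_YEAR * utilization)


if __name__ == "__main__":
    # Hypothetical: $30,000 GPU, $0.50/h to run on-premises, $4.00/h to rent.
    print(f"{break_even_hours(30_000, 0.50, 4.00):,.0f} hours")
    print(f"{break_even_years(30_000, 0.50, 4.00, utilization=0.5):.1f} years at 50% utilization")
```

With these placeholder inputs, ownership breaks even after about 8,571 hours of use, or roughly two years at 50% utilization; at lower utilization, renting stays cheaper for longer.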

Complexity

Programming for GPUs can be more complex because it requires specialized frameworks and languages, such as CUDA for NVIDIA GPUs, which may present a steeper learning curve for developers accustomed to traditional CPU programming. High-end GPUs also consume significant power and generate substantial heat, requiring more robust cooling and energy planning that can increase operational costs in data centers and other specialized computing environments. Development teams must be chosen carefully to build this capability, and infrastructure plans must account for the different maintenance needs of GPUs versus traditional processors.
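To put the energy point in numbers, data centers commonly use power usage effectiveness (PUE), the ratio of total facility energy to the energy consumed by IT equipment, to fold cooling and other overhead into the power bill. The sketch below estimates annual electricity cost for a group of GPUs; the 700 W board power, utilization, electricity price, and PUE of 1.5 are hypothetical inputs to replace with your own figures.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(device_watts: float, n_devices: int, utilization: float,
                       price_per_kwh: float, pue: float = 1.5) -> float:
    """Yearly electricity cost, including cooling and facility overhead via PUE.

    PUE = total facility energy / IT equipment energy, so PUE >= 1.0.
    Simplification: devices draw full power while busy and nothing while idle.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_kwh = device_watts * n_devices * utilization * HOURS_PER_YEAR / 1000.0
    return it_kwh * pue * price_per_kwh


if __name__ == "__main__":
    # Hypothetical: eight 700 W GPUs, fully utilized, $0.12/kWh, PUE of 1.5.
    cost = annual_energy_cost(700, 8, 1.0, 0.12, pue=1.5)
    print(f"${cost:,.2f} per year")
```

With these inputs the estimate is about $8,830 per year, and with a PUE of 1.5 one third of that is cooling and facility overhead rather than the GPUs themselves.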

TPUs

Overview

Following the rise of GPUs, Google developed Tensor Processing Units (TPUs), custom application-specific integrated circuits (ASICs) designed to accelerate neural network computations and offer an optimized cost-performance ratio for such workloads. Aimed at both the training and inference phases of deep learning models, TPUs were originally built around Google's TensorFlow framework and now also support JAX and PyTorch (via PyTorch/XLA). They represent a leap forward in accelerating AI research and application development, offering a unique proposition for businesses looking to leverage advanced AI capabilities.

Advantages

Performance

For decision-makers prioritizing efficiency and scalability in AI initiatives, TPUs offer compelling advantages. Their high throughput and low latency enable rapid training and deployment of complex neural networks, dramatically reducing computational times and associated costs. TPUs are optimized for dense matrix computations, common in AI applications, providing an energy-efficient solution that can lower operational expenses.
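The dense matrix multiplications that TPUs are built to accelerate have a simple cost model: multiplying an m-by-k matrix by a k-by-n matrix takes m * k * n multiply-add operations, conventionally counted as 2 * m * k * n floating-point operations (FLOPs). The pure-Python sketch below shows the operation itself and uses the FLOP count for a rough runtime estimate; the layer sizes, the 100 TFLOP/s peak throughput, and the 50% efficiency factor are hypothetical assumptions, not TPU specifications.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive dense matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # k multiply-adds per output element.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product, counting a multiply-add as 2."""
    return 2 * m * k * n


def estimated_seconds(flops: int, peak_flops_per_s: float, efficiency: float) -> float:
    """Runtime if the hardware sustains `efficiency` of its peak throughput."""
    return flops / (peak_flops_per_s * efficiency)


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
    # Hypothetical layer: batch of 1,024 inputs, 4,096 features in and out.
    flops = matmul_flops(1024, 4096, 4096)
    print(f"{flops:.3e} FLOPs, ~{estimated_seconds(flops, 100e12, 0.5) * 1e3:.2f} ms")
```

Because the FLOP count grows with the product of all three dimensions, hardware that performs many multiply-adds per clock cycle pays off most on exactly these large, dense layers.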

Cloud Integration

Additionally, integration with Google Cloud services allows organizations to scale their AI projects with ease, without the need for substantial initial investments in physical infrastructure. This cloud-based model offers flexibility and accessibility, enabling companies to focus on innovation and speed to market.

Disadvantages

Limited Versatility

While the TPU's specialized design is powerful for deep learning workloads, it is less versatile than a GPU: TPUs work best with frameworks built on Google's XLA compiler, such as TensorFlow, JAX, and PyTorch/XLA, so models that rely on other frameworks or unsupported operations may not benefit from their capabilities. Furthermore, Cloud TPUs are available only through Google Cloud, which introduces dependencies on external cloud infrastructure and raises considerations around data privacy, sovereignty, and network latency in time-sensitive applications.

Steep Learning Curve

The programming model for TPUs may present a learning curve, requiring developers to adapt to a less familiar development environment, which could affect project timelines. The TPU development ecosystem is also significantly less mature than the GPU ecosystem, so TPU expertise will be more difficult and expensive to acquire than GPU expertise.

FPGAs

Overview

Field Programmable Gate Arrays (FPGAs) are highly versatile, customizable hardware devices that can be programmed to perform a wide array of computing tasks. Unlike CPUs, GPUs, and TPUs, whose circuitry is fixed at manufacture, FPGAs can be reconfigured after manufacture to suit different applications and workloads, offering a unique blend of flexibility and performance. This reconfigurability makes FPGAs particularly appealing for applications requiring specific, optimized processing capabilities, including signal processing, data analysis, and certain AI and machine learning tasks.

Advantages

Reprogrammable

FPGAs can be reprogrammed for different tasks, offering exceptional flexibility and allowing businesses to adapt the same hardware to multiple applications. This can extend the hardware's useful life and maximize ROI without ordering new hardware.

High Performance, Low Latency

FPGAs are also known for their high performance in tasks that can be parallelized, offering efficient processing speeds that can rival those of GPUs in certain scenarios. Additionally, FPGAs offer low latency processing, making them ideal for real-time applications such as financial trading algorithms or embedded systems in automotive safety. Their energy efficiency is another critical advantage, as FPGAs can provide more computations per watt compared to general-purpose processors, potentially reducing operational costs in energy-sensitive deployments.
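Claims about computations per watt are easiest to compare when normalized to the same workload. The sketch below converts a device's measured throughput and power draw into inferences per joule (one watt is one joule per second) and picks the most energy-efficient option; the device names and numbers are hypothetical placeholders for your own benchmark results, not measurements of real FPGAs or GPUs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    inferences_per_second: float  # measured on your workload (hypothetical here)
    watts: float                  # average power draw during that measurement

    @property
    def inferences_per_joule(self) -> float:
        # A watt is one joule per second, so (inferences/s) / (J/s) = inferences/J.
        return self.inferences_per_second / self.watts

    @property
    def millijoules_per_inference(self) -> float:
        return 1000.0 * self.watts / self.inferences_per_second


def most_efficient(devices: list[Device]) -> Device:
    return max(devices, key=lambda d: d.inferences_per_joule)


if __name__ == "__main__":
    candidates = [
        Device("device_a", inferences_per_second=2_000, watts=40),
        Device("device_b", inferences_per_second=8_000, watts=300),
    ]
    for d in candidates:
        print(f"{d.name}: {d.inferences_per_joule:.1f} inf/J, "
              f"{d.millijoules_per_inference:.1f} mJ/inf")
    print("most efficient:", most_efficient(candidates).name)
```

In this made-up comparison the less efficient device still delivers four times the throughput, so energy efficiency is one input to the decision rather than the whole answer.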

Smaller Form Factor

FPGAs can have a much smaller physical footprint than discrete GPUs, which offers significant benefits in flexibility and integration within various hardware environments. This compact size allows FPGAs to be incorporated into space-constrained applications such as embedded systems, mobile devices, and IoT gadgets, enabling powerful, customizable computing capabilities without the bulk and heat management challenges associated with larger GPUs.

Disadvantages

High Complexity

The primary challenge in working with FPGAs is their complexity. Programming FPGAs requires specialized knowledge of hardware description languages (HDLs) such as VHDL or Verilog, which can steepen the learning curve for teams accustomed to software development. This complexity can lead to longer development timelines and potentially higher costs for training or hiring specialized personnel.

Upfront Costs

Additionally, the upfront cost of FPGAs and the tools required for their development can be significant, making them less accessible for smaller projects or startups with limited budgets. Moreover, while FPGAs offer excellent efficiency for specific tasks, they may not always provide the best performance-per-dollar ratio for general-purpose computing needs compared to CPUs or GPUs, especially in applications that do not fully exploit their reconfigurability and parallel processing capabilities.
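Performance per dollar depends heavily on how busy the hardware is. A minimal sketch, assuming the purchase cost is amortized evenly over the hardware's service life and operating costs accrue whether or not the device is busy: the effective cost of a million inferences is the hourly cost divided by the useful inferences delivered per hour, so idle time raises the cost of every unit of work. All prices, lifetimes, and throughputs below are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365


def amortized_hourly_cost(purchase_cost: float, lifetime_years: float,
                          hourly_operating_cost: float) -> float:
    """Straight-line amortization of the purchase plus running costs, per hour.

    Simplification: operating cost is charged for every hour, busy or idle.
    """
    return purchase_cost / (lifetime_years * HOURS_PER_YEAR) + hourly_operating_cost


def cost_per_million(hourly_cost: float, inferences_per_second: float,
                     utilization: float) -> float:
    """Cost of one million inferences when the device is busy `utilization` of the time."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    useful_per_hour = inferences_per_second * 3600 * utilization
    return hourly_cost / useful_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical device: $10,000 over 3 years, $0.10/h to run, 1,500 inferences/s.
    hourly = amortized_hourly_cost(10_000, 3, 0.10)
    for u in (0.9, 0.3):
        print(f"utilization {u:.0%}: ${cost_per_million(hourly, 1_500, u):.3f} per million")
```

Under these assumptions, dropping utilization from 90% to 30% triples the cost per inference, which is the effect described above for hardware whose capabilities go unused.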

Specialized Chiplets

Specialized AI chiplets are cutting-edge innovations designed to cater specifically to the computational demands of artificial intelligence and machine learning applications. Unlike traditional monolithic processor designs, which place all functions on a single large die, chiplets are smaller, modular dies, each handling a function such as compute or I/O, that can be combined in a single package to achieve the desired performance characteristics. This modular approach allows for highly customized configurations that can be tailored to the specific needs of AI applications, ranging from neural network training to real-time inference tasks. The flexibility and scalability offered by AI chiplets represent a significant advancement in hardware design, enabling more efficient and powerful computing solutions.

Advantages

Extendability

The modularity of chiplets allows for rapid innovation and iteration: new or upgraded chiplets can be combined with proven existing designs in the next product generation without redesigning the entire chip, helping AI infrastructure stay at the cutting edge. This approach can significantly reduce the development time and costs associated with deploying AI solutions.

Fine-Tuned Performance

Moreover, chiplets can offer superior performance and energy efficiency for AI tasks, as each chiplet can be optimized for specific functions, reducing unnecessary power consumption and improving overall system efficiency. The use of AI chiplets can lead to more compact and cost-effective hardware designs, enabling powerful AI computing capabilities in smaller form factors, which is particularly beneficial for edge computing applications where space and power are limited.

Disadvantages

Technical Barriers

One of the main disadvantages is the complexity involved in designing and integrating multiple chiplets into a cohesive system. This requires advanced technical expertise and may necessitate collaboration with chiplet manufacturers to ensure compatibility and optimal performance, potentially leading to higher initial development costs. Furthermore, the nascent state of the chiplet ecosystem means there are fewer established standards and protocols for integration, raising concerns about long-term support and scalability.

Supply Chain Considerations

While chiplets allow for customized solutions, this customization can lead to difficulties in sourcing specific chiplet configurations and may limit the ability to quickly scale production in response to demand. The reliance on specialized components like AI chiplets could also pose supply chain risks, especially in times of global semiconductor shortages, affecting project timelines and budgets.

Optimizing Cost with Specialized AI Hardware

With an ever-changing industry and a wide variety of options, the choice of hardware for AI applications becomes a critical decision that impacts both performance and cost efficiency. Here are key considerations for selecting the right AI hardware:

Identify Your AI Workload Requirements: Different AI tasks require different computational resources. Training large neural networks, for example, might benefit more from the raw power of GPUs, while inference tasks might be more cost-efficiently handled by TPUs or specialized AI chiplets.

Consider the Total Cost of Ownership (TCO): Beyond the initial purchase price, consider the energy consumption, maintenance, and potential need for specialized cooling solutions. FPGAs, for instance, might offer lower ongoing costs due to their reconfigurability and energy efficiency compared to GPUs.

Evaluate the Ecosystem and Support: The maturity of the software ecosystem surrounding the hardware is crucial. GPUs benefit from a broad range of tools and libraries, such as CUDA for Nvidia GPUs, facilitating their use. The availability of developer tools, documentation, and community support can significantly impact development time and costs.

Flexibility and Future-Proofing: In a rapidly evolving field, the ability to adapt to new algorithms and models is key. FPGAs and modular chiplets offer a degree of future-proofing by allowing hardware to be reconfigured or updated without a complete overhaul.

Unlocking New Use Cases: Specialized AI hardware enables new applications that were previously unfeasible due to computational limitations. For example, edge computing devices equipped with AI chiplets can perform real-time data processing without the need to communicate with a central server, opening up new possibilities in IoT, autonomous vehicles, and remote sensing.
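The total cost of ownership consideration in the list above can be made concrete with a simple, undiscounted model: purchase price plus yearly energy, cooling, and maintenance costs over the hardware's service life. The sketch below compares two options; the option names and every figure are hypothetical, and a fuller analysis would add financing, discounting, staffing, and resale value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostProfile:
    name: str
    purchase: float
    annual_energy: float
    annual_cooling: float
    annual_maintenance: float

    def tco(self, years: int) -> float:
        """Undiscounted total cost of ownership over `years` of service."""
        annual = self.annual_energy + self.annual_cooling + self.annual_maintenance
        return self.purchase + years * annual


def cheapest(options: list[CostProfile], years: int) -> CostProfile:
    return min(options, key=lambda o: o.tco(years))


if __name__ == "__main__":
    options = [
        CostProfile("option_a", purchase=40_000, annual_energy=6_000,
                    annual_cooling=3_000, annual_maintenance=2_000),
        CostProfile("option_b", purchase=55_000, annual_energy=2_500,
                    annual_cooling=1_000, annual_maintenance=2_500),
    ]
    for years in (1, 5):
        best = cheapest(options, years)
        print(f"{years} year(s): {best.name} at ${best.tco(years):,.0f}")
```

With these placeholder numbers, the cheaper-to-buy option wins over one year, but the option with lower running costs wins over five, which is why TCO is best evaluated over the expected service life rather than at the point of purchase.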

Wrangling a Rapidly Changing Field

The AI hardware landscape is in constant flux, with new technologies and updates emerging rapidly. Keeping up with the latest developments is crucial for making sound decisions about hardware investments, and acquiring the expertise to execute those decisions with confidence and efficiency can be an expensive and time-consuming challenge.

Working with expert consultants, like those at SDV International, can bridge the expertise gap that prevents an organization from fully realizing its artificial intelligence goals. Through our cutting-edge decision-making processes, state-of-the-art expertise in AI hardware, and dedication to our clients, you can rely on us to provide the critical knowledge needed to develop a lean, cutting-edge, cost-efficient solution.

Contact SDV International today at our website, by calling 800-738-0669, or by emailing info@SDVInternational.com.